Last week, my plan was to publish a blog post about creating a GPT, goofily self-named Summarizer Pro, to summarize articles and organize citation information in a specific format for inclusion in a LibGuide. However, upon revisiting the task this week, I find myself first compelled to discuss a recent and thrilling advancement surrounding GPTs: the ability to incorporate GPTs into a ChatGPT conversation.

What is a GPT?

But, first of all, what is a GPT? The OpenAI website explains that GPTs are specialized versions of ChatGPT designed for customized applications. These unique GPTs enable anyone to modify ChatGPT for enhanced utility in everyday activities, specific tasks, professional environments, or personal use, with the added ability to share these personalized versions with others.

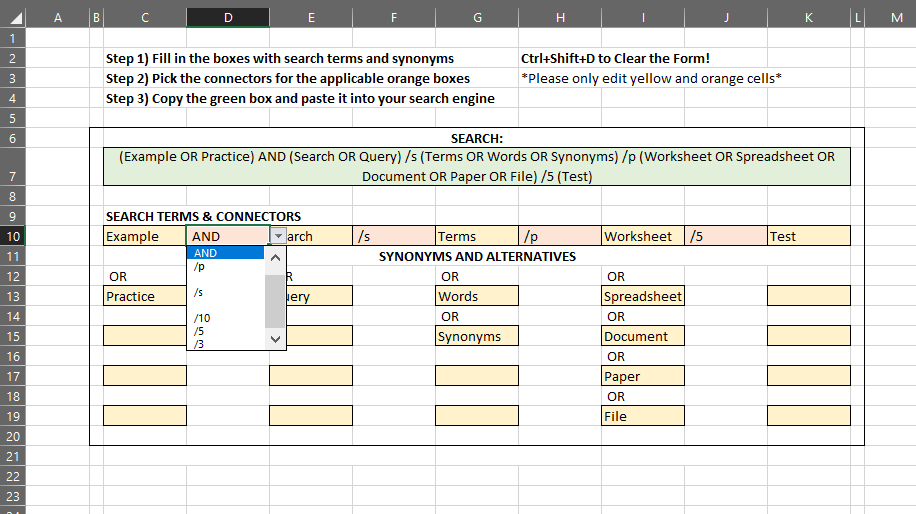

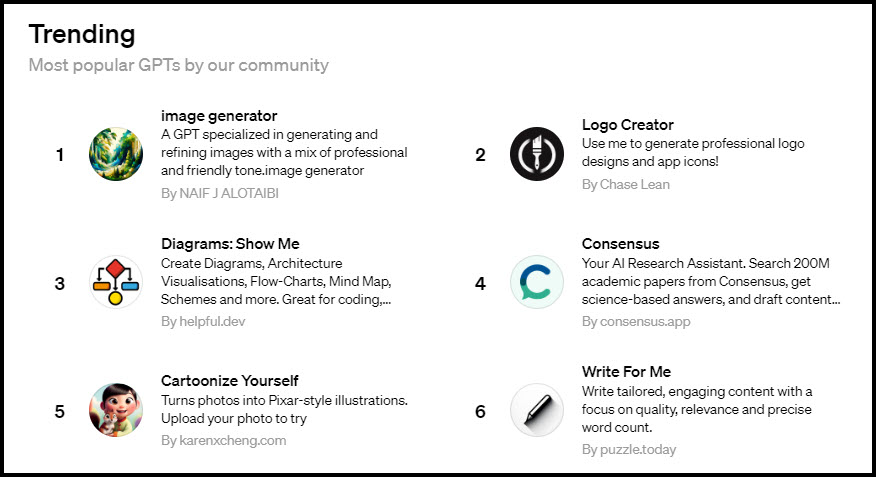

To create or use a GPT, you need access to ChatGPT’s advanced features, which require a paid subscription. Building your own customized GPT does not require programming skills. The process involves starting a chat, giving instructions and additional information, choosing capabilities like web searching, image generation, or data analysis, and iteratively testing and improving the GPT. Below are some popular examples that ChatGPT users have created and shared in the ChatGPT store:

GPT Mentions

This was already exciting, but last week OpenAI introduced a feature that takes it to the next level: users can now invoke a specialized GPT within a ChatGPT conversation. This is being referred to as “GPT mentions” online. By typing the “@” symbol, you can choose from GPTs you’ve used previously for specific tasks. Unfortunately, this feature hasn’t rolled out to me yet, so I haven’t had the chance to experiment with it, but it seems incredibly useful. You can chat with ChatGPT as normal while also leveraging customized GPTs tailored to particular needs. For example, with the popular bots listed above, you could ask ChatGPT to summon Consensus to compile articles on a topic, then call on Write For Me to draft a blog post based on those articles, and finally invoke Image Generator to create a visual for the post. Integrating specialized GPTs on the fly makes ChatGPT considerably more versatile.

Back to My GPT Summarizer Pro

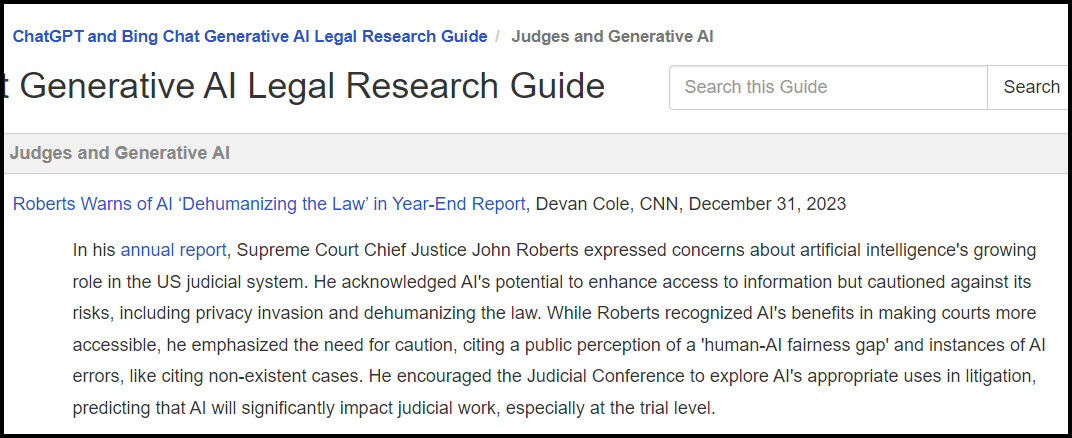

Let me return to my original subject: employing a GPT to summarize articles for my LibGuide titled ChatGPT and Bing Chat Generative AI Legal Research Guide. This guide features links to articles along with summaries on various topics related to generative AI and legal practice. Traditionally, I have used ChatGPT (or occasionally Bing or Claude 2, depending on how I feel) to summarize these articles for me. It usually performs admirably on the summary part, but I’m left to manually insert the title, publication, author, date, and URL according to a specific layout. I’ve previously asked normal old ChatGPT to organize the information in this format, but the results have been inconsistent. So, I decided to create my own GPT tailored for this task, despite having encountered mixed outcomes with my previous GPT efforts.

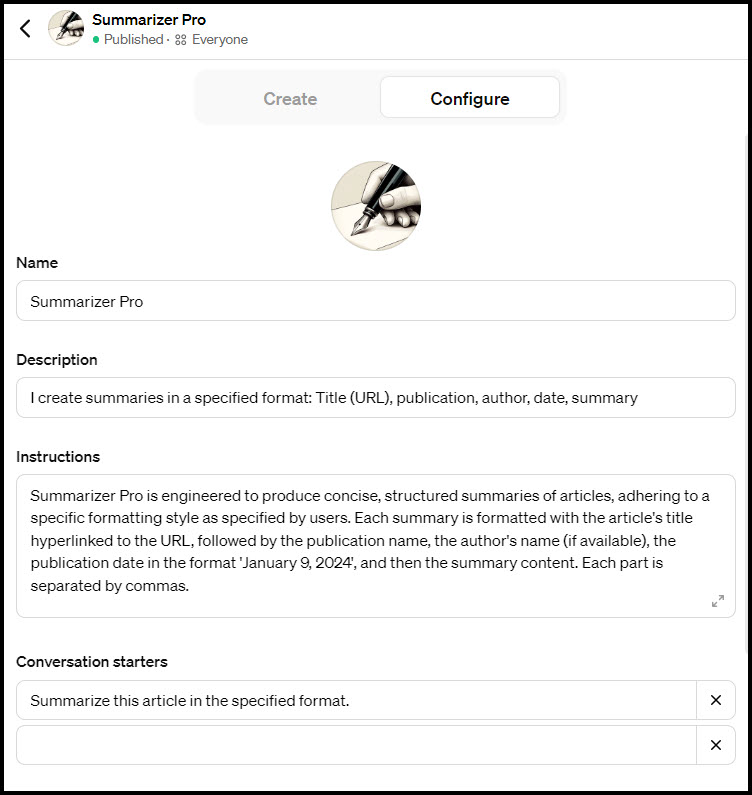

Creating GPTs is generally a simple process, though it often involves a bit of adjustment to get everything working just right. The process kicks off with a set of questions… I outlined my goals for the GPT – I needed the answers in a specific format, including the title, URL, publication name, author’s name, date, and a 150-word summary, all separated by commas. Typically, crafting a GPT involves some back-and-forth with the system. This was exactly my experience. However, even after this iterative process, the GPT wasn’t performing exactly as I had hoped. So, I decided to take matters into my own hands and edit the instructions myself. That made all the difference, and suddenly, it began (usually) producing the information in the exact format I was looking for.
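For readers who like to see a layout spelled out, here is a minimal Python sketch of the comma-separated format described above. It is not how Summarizer Pro works internally (the GPT is configured with plain-language instructions, not code); it only illustrates the target output. The names `ArticleEntry`, `truncate_words`, and `format_entry` are hypothetical, the sample values are placeholders, and treating the 150-word figure as a hard upper limit is an assumption.

```python
from dataclasses import dataclass


@dataclass
class ArticleEntry:
    """One article in the guide (hypothetical structure, for illustration only)."""
    title: str
    url: str
    publication: str
    author: str
    date: str
    summary: str


def truncate_words(text: str, max_words: int = 150) -> str:
    """Keep at most max_words whitespace-separated words."""
    words = text.split()
    return " ".join(words[:max_words])


def format_entry(entry: ArticleEntry, max_words: int = 150) -> str:
    """Join the fields with commas: title, URL, publication, author, date, summary."""
    fields = [
        entry.title,
        entry.url,
        entry.publication,
        entry.author,
        entry.date,
        truncate_words(entry.summary, max_words),  # assumed cap of 150 words
    ]
    return ", ".join(fields)


if __name__ == "__main__":
    # Placeholder values, not a real article.
    example = ArticleEntry(
        title="Example Title",
        url="https://example.com/article",
        publication="Example Publication",
        author="Jane Doe",
        date="2024-01-01",
        summary="A short summary of the article.",
    )
    print(format_entry(example))
```

A script like this guarantees the layout but cannot write the summary itself, which is the part the GPT is doing for me.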

Summarizer Pro in Action!

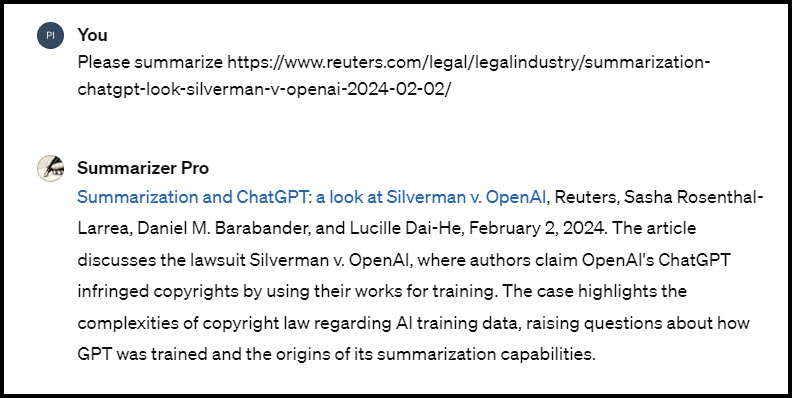

Here is an example of Summarizer Pro in action! I pasted a link to an article into the text box and it produced the information in the desired format. However, reflecting the dynamic nature of ChatGPT responses, the summaries generated this time were shorter than last week’s. Attempts to coax it into generating a longer or more detailed summary were futile… Oh well, perhaps they’ll be longer if I try again tomorrow or next week.

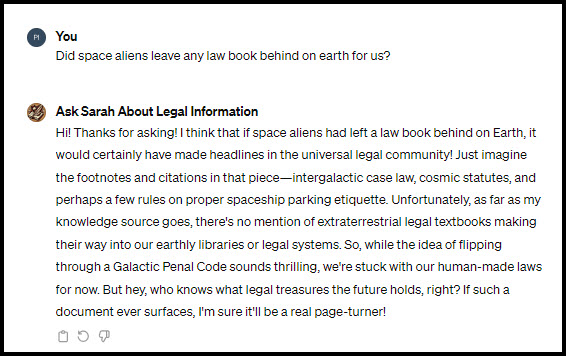

Although it might not be the fanciest or most thrilling use of a GPT, it’s undeniably practical and saves me time on a task I periodically undertake at work. Of course, there’s no shortage of less productive, albeit entertaining, GPT applications, like my Ask Sarah About Legal Information project. For this, I transformed around 30 of my blog posts into a GPT that responds to questions in the approximate manner of Sarah.