A few months ago, a law professor posted on Twitter about a hallucination he observed in Lexis+ AI. He asked “What cases have applied Students for Fair Admissions, Inc. v. Harvard College to the use of race in government decisionmaking?” The answer from Lexis+ AI included two hallucinated cases. (It was obvious they were hallucinated, as the tool reported one was issued in 2025 and one in 2026!)

Lexis responded, stating this was an anomalous result, but that only statements with links can be expected to be hallucination-free, and that “where a citation does not include a link, users should always review the citation for accuracy.”

Why is this happening?

If you’ve been following this blog, you’ve seen me write about retrieval-augmented generation (RAG), one of vendors’ favorite techniques for reducing hallucinations. RAG takes the user’s question and passes it (perhaps with some modification) to a database. The database results are fed to the model, which identifies the relevant passages or snippets; those are then sent back into the model as “context” along with the user’s question.
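For readers who like to see the moving parts, here is a minimal Python sketch of that flow. It is only an illustration, not how Lexis+ AI or any vendor product is built: the three-passage "database" is invented, `retrieve` uses simple word overlap where a real system would use a search engine or embeddings, the passage-selection step is folded into retrieval, and `generate` is a hypothetical placeholder for the call to the language model.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Passage:
    citation: str  # invented label, not a real case
    text: str


# Toy stand-in for a case-law database; all contents are made up.
DATABASE = [
    Passage("Case A", "The automatic stay halts proceedings against the debtor."),
    Passage("Case B", "The limitations period under the treaty is two years."),
    Passage("Case C", "A lease renewal requires written notice to the landlord."),
]

STOPWORDS = {"a", "the", "is", "to", "does", "under"}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its words, minus punctuation and stopwords."""
    words = {w.strip(".,?!").lower() for w in text.split()}
    return words - STOPWORDS


def retrieve(question: str, database: list[Passage], top_k: int = 2) -> list[Passage]:
    """Step 1: pull the passages that share the most words with the question."""
    q = tokenize(question)
    scored = [(len(q & tokenize(p.text)), p) for p in database]
    scored = [(score, p) for score, p in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in scored[:top_k]]


def build_prompt(question: str, passages: list[Passage]) -> str:
    """Step 2: package the retrieved passages as context next to the question."""
    context = "\n".join(f"[{p.citation}] {p.text}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


def generate(prompt: str) -> str:
    """Step 3 (hypothetical placeholder): a real system would call a language model here."""
    return "(model-written summary of the context would appear here)"


def answer(question: str) -> tuple[str, list[Passage]]:
    """Run the three steps and return the summary plus the passages it was given."""
    passages = retrieve(question, DATABASE)
    return generate(build_prompt(question, passages)), passages
```

Notice that the passages returned alongside the summary are real entries from the database, which is what makes linked citations possible. Nothing in the sketch checks that the text produced by `generate` accurately describes those passages.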

However, as I said then, RAG cannot eliminate hallucinations. RAG will ground the response in real data (case law, pulled from the database and linked in the response), but the generative AI’s summary of that real data can still be off.

Another example – Mata v. Avianca is back

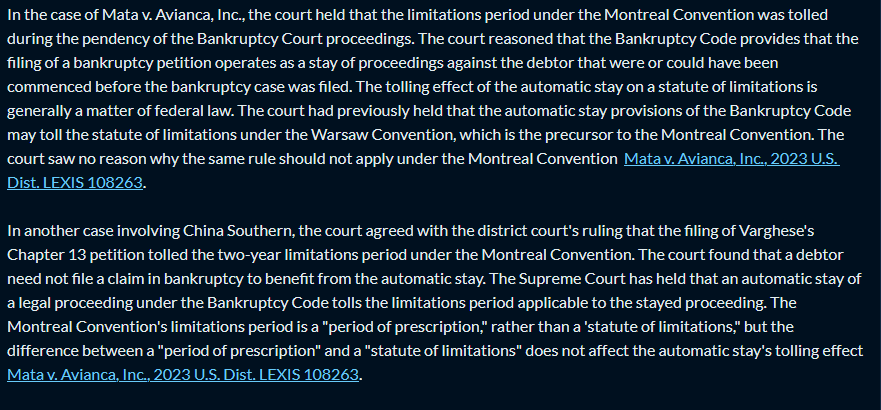

I’ve observed this myself when working with Lexis+ AI. For example, I asked Lexis+ AI a fairly complex question at the intersection of bankruptcy law and international law: “Draft an argument that federal bankruptcy stay tolls the limitations period for a claim under the Montreal Convention.”

Lexis+ AI returned a summary of the law, citing Mata v. Avianca for the point that “the filing of a bankruptcy petition can toll the Montreal Convention’s two-year limitations period, which does not begin to run until the automatic stay is lifted.”

![The image contains a screenshot of a text document, which seems to be an interface from a legal database or research platform with an option to "Select Jurisdiction" at the top right corner. The document reads as follows:

Question: Draft an argument that federal bankruptcy stay tolls the limitations period for a claim under the Montreal Convention

Response:

The Montreal Convention's limitations period can be tolled during the pendency of bankruptcy proceedings. This is based on the Bankruptcy Code, which states that the filing of a bankruptcy petition operates as a stay of proceedings against the debtor that were or could have been commenced before the bankruptcy case was filed. The tolling effect of the automatic stay on a statute of limitations is generally a matter of federal law. The automatic stay provisions of the Bankruptcy Code may toll the statute of limitations under the Montreal Convention, as they have done under the Warsaw Convention, which is the precursor to the Montreal Convention. The Supreme Court has held that an automatic stay of a legal proceeding under the Bankruptcy Code tolls the limitations period applicable to the stayed proceeding. Therefore, the filing of a bankruptcy petition can toll the Montreal Convention's two-year limitations period, which does not begin to run until the automatic stay is lifted [Mata v. Avianca, Inc., 2023 U.S. Dist. LEXIS 108263]. However, it's worth noting that there is a split of authority on this issue. Some courts have held that the Montreal Convention's two-year period is a strict condition precedent to the bringing of a claim, as opposed to a limitations period. Under this view, which does not treat tolling principles Rodriguez v. Asa, 2023 U.S. Dist. LEXIS 148451, Mata v. Avianca, Inc. 2023 U.S. Dist. LEXIS 108261, Kasalyn v. Delta Air Lines, Inc., 2023 U.S. Dist. LEXIS 154302.](https://www.ailawlibrarians.com/wp-content/uploads/2024/03/image-1-1024x563.png)

If the case name Mata v. Avianca sounds familiar to you, it’s probably because this is the case that landed two New York attorneys on the front page of the New York Times last year for citing hallucinated cases. The snippet from Lexis+ AI, though citing Mata, in fact appears to be summarizing those hallucinated cases (recounted in Mata), which stated the law exactly backwards.

When to beware

A few things to notice about the above examples, which give us some ideas of when to be extra-careful in our use of generative AI for legal research.

- Hallucinations are more likely when you are demanding an argument rather than asking for the answer to a neutrally phrased question. This is what happened in my Lexis+ AI example above, and is actually what happened to the attorneys in Mata v. Avianca as well – they asked for an argument to support an incorrect proposition of law rather than a summary of law. A recent study of hallucinations in legal analysis found that these so-called contra-factual hallucinations are disturbingly common across many LLMs.

- Hallucinations can occur when the summary purports to be of the cited case, but is actually a summary of a case cited within that case (and perhaps not characterized positively). You can see this very clearly in further responses I got summarizing Mata v. Avianca, which purport to be summarizing a “case involving China Southern” (again, one of the hallucinated cases recounted in Mata).

- Finally, hallucinations are also more likely when the model has very little responsive text to go on. The law professor’s example involved a recent Supreme Court case that likely had not been applied many times. Additionally, Lexis+ AI does not seem to work well with questions about Shepard’s results – it may not be connected in that way yet. So, with nothing to really go on, it is more prone to hallucination.

Takeaway tips

A few takeaway tips:

- Ask your vendor which sources are included in the generative AI tool, and only ask questions that can be answered from that data. Don’t expect generative AI research products to automatically have access to other data from the vendor (Shepard’s, litigation analytics, PACER, etc.), as that may take some time to implement.

- Always read the cases for yourself. We’ve always told students not to rely on editor-written headnotes, and the same applies to AI-generated summaries.

- Be especially wary if the summary refers to a case not linked. This is the tip from Lexis, and it’s a good one, as it can clue you in that the AI may be incorrectly summarizing the linked source.

- Ask your questions neutrally. Even if you ultimately want to use the authorities in an argument, better to get a dispassionate summary of the law before launching into an argument.
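The "case not linked" tip can also be expressed as a small check. The Python sketch below is hypothetical: it assumes you have copied the answer text out of the tool and separately noted which citations appeared as links, and it only recognizes citations shaped like the ones in the screenshot above (for example, "2023 U.S. Dist. LEXIS 108263"). The function name and the sample citations are invented for illustration.

```python
import re

# Matches citations shaped like "2023 U.S. Dist. LEXIS 108263".
# Other citation formats would need their own patterns (an assumption of this sketch).
LEXIS_CITE = re.compile(r"\b\d{4} U\.S\. Dist\. LEXIS \d+\b")


def unlinked_citations(answer_text: str, linked: set[str]) -> list[str]:
    """Return citations found in the answer that are not in the set of linked ones."""
    flagged = []
    for cite in LEXIS_CITE.findall(answer_text):
        if cite not in linked and cite not in flagged:
            flagged.append(cite)
    return flagged


# Invented sample text and citations, for illustration only.
sample = (
    "The stay tolls the period [2099 U.S. Dist. LEXIS 11111]. "
    "But see 2099 U.S. Dist. LEXIS 22222."
)
print(unlinked_citations(sample, linked={"2099 U.S. Dist. LEXIS 11111"}))
```

A flagged citation is only a prompt to go read it. As the Mata v. Avianca example shows, a linked citation does not guarantee that the summary attached to it is accurate.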

A disclaimer

These tools are constantly improving, and the vendors are very open to feedback. I was not able to reproduce the error recounted at the beginning of this post; whatever caused it has presumably been addressed by Lexis. The Mata v. Avianca errors still remain, but I did provide feedback on them, and I expect they will be corrected quickly.

The purpose of this post is not to tell you that you should never use generative AI for legal research. I’ve found Lexis+ AI helpful on many tasks, and students especially have told me they find it useful. There are several other tools out there that are worth evaluating as well. However, we should all be aware that these hallucinations can still happen, even with systems connected to real cases, and that there are ways we can interact with the systems to reduce hallucinations.

It’s not just hallucinations we need to worry about. My testing has shown that generative AI systems, whether built for law or general-purpose, don’t do well with statutes. In one instance, a popular AI tool cited an old version of a statute and got the wrong answer. I wonder if generative AI handles statutes or regulations well at all. I love the new tools, but skepticism is in order.

Absolutely, Paul. The systems don’t seem great at handling anything that requires looking around a bit (whether at neighboring sections of a statute/regulation, or at a case’s history or citing references). Also not great at weighing/reconciling authorities with different persuasiveness. Maybe it will be possible at some point, but not yet.
